Stay up to date on the latest happenings in digital marketing

Summary

-

Ensure Data Accuracy: Marketing professionals should implement a structured approach to data validation, such as breaking tasks into smaller, manageable parts and enforcing strict schemas, to prevent AI-generated inaccuracies (hallucinations) that can lead to misguided decisions.

-

Leverage Advanced AI Capabilities: Utilize AI integrations that prioritize clarity and simplicity in data reporting, allowing for quick, reliable insights without unnecessary complexity, thus optimizing campaign performance and decision-making speed.

-

Continuous Evaluation and Improvement: Establish robust monitoring and feedback mechanisms to evaluate AI outputs regularly, ensuring the system evolves and maintains high-quality, actionable insights that align with real-time market conditions and consumer behavior.

You want marketing data. You want it fast. So you get a little help from your silicon friends. But is it right? Will AI tell you that you have 5 million app installs on Venus? Will your favorite LLM report -3,000 conversions in your top-performing Match-3 game? In other words, will using AI result in bad data thanks to a hallucination?

Hallucinations are a fact of life with modern LLM-based AI systems.

It’s smart to be worried about them.

That’s something that we’ve taken a great deal of care to avoid with our new MCP integration with Anthropic’s Claude.

- You don’t want to be that lawyer who used ChatGPT to draft a brief that referenced non-existent case law

- You won’t want to be that airline whose chatbot invented a compassionate refund policy that didn’t exist

- You won’t want to be that real estate company that advertised ocean views in Kansas, thanks to an LLM that confused 2 similar-looking city names

(Yeah, all true stories, by the way. I know because ChatGPT told me about them.)

You want to be the marketing hero who gets the right information and makes the right decision and delivers measurable growth. But you need that data. You need it quick. And a couple sexy charts wouldn’t hurt, too.

But it needs to be correct. Accurate. True. Actionable.

Plus, not something you’ll get fired for.

Zero hallucination AI-generated marketing insight

So how do we avoid hallucinations with our AI-generated marketing insight?

There’s probably no completely perfect, 1000% safe solution here, but we’re seeing amazing results with really high-end accuracy with a multi-pronged 7-step approach:

- We eat the elephant 1 bite at a time

We break every user question into tiny, fully tested tasks. Each task handles just one piece … dates, metrics, dimensions, filters, breakdowns, you name it, and then runs in parallel. Breaking down tasks this way lets the model focus on 1 thing at a time, lets us validate each step, and cuts response time while also boosting accuracy. - Strict schemas

We enforce strict schemas for every task. If a start date comes after an end date or a metric name is unknown, the flow stops immediately and returns a clear error instead of inventing data. - No guessing allowed

Like a highly-secure competent person, we allow the model to admit uncertainty. When the required information is missing, the LLM can answer “I don’t have enough information,” preventing creative guesses. - KISS: keep it simple, stupid

We keep every prompt lean. We skip tasks your desired answer doesn’t need, like filtering when the user didn’t ask for it. That reduces noise, latency, and token use. - Small is beautiful

We design each model call to do just 1 small job: nothing more. Clear, single-purpose calls prevent prompt injection, keeping outputs predictable. - Observability, logging, daily evaluation

We ship with full observability and daily evaluation loops. Every run is logged and replayable, and a mix of unit tests, integration tests, and an automated LLM-as-a-judge checks the model against key metrics, so quality improves over time. - Friendly failures

We handle failure gracefully. If something goes wrong, users see a plain-language suggestion like “Try a shorter date range” instead of some cryptic stack trace.

Add it all up, and you get trustworthy dashboards that match what you’d see if you pulled all the data the old-fashioned way in Singular manually.

It also speeds up your responses, because small tasks executed in parallel via smaller prompts deliver answers in seconds.

Extra bonuses:

- Future-proofing

More LLMs are delivering MCP integration capabilities. With all these steps, the guardrails remain as we onboard additional partners. - Peace of mind

The lawyers who argued their case on non-existent precedents got sanctioned and fined. With all these checks and balances, you can be confident that the data you’re getting from your AI helper is secure, validated, and keeps surprises to a minimum.

Sum it all up, and our MCP integration plus our data integrity best practices helps us provide accurate, grounded data that is continuously validated.

That means your plain English questions transform into reliable performance insights. No hallucinations, just numbers you can count on.

Usage is off the charts

Now’s a good time to mention, by the way, that we had no idea how many of you were just waiting for this kind of solution.

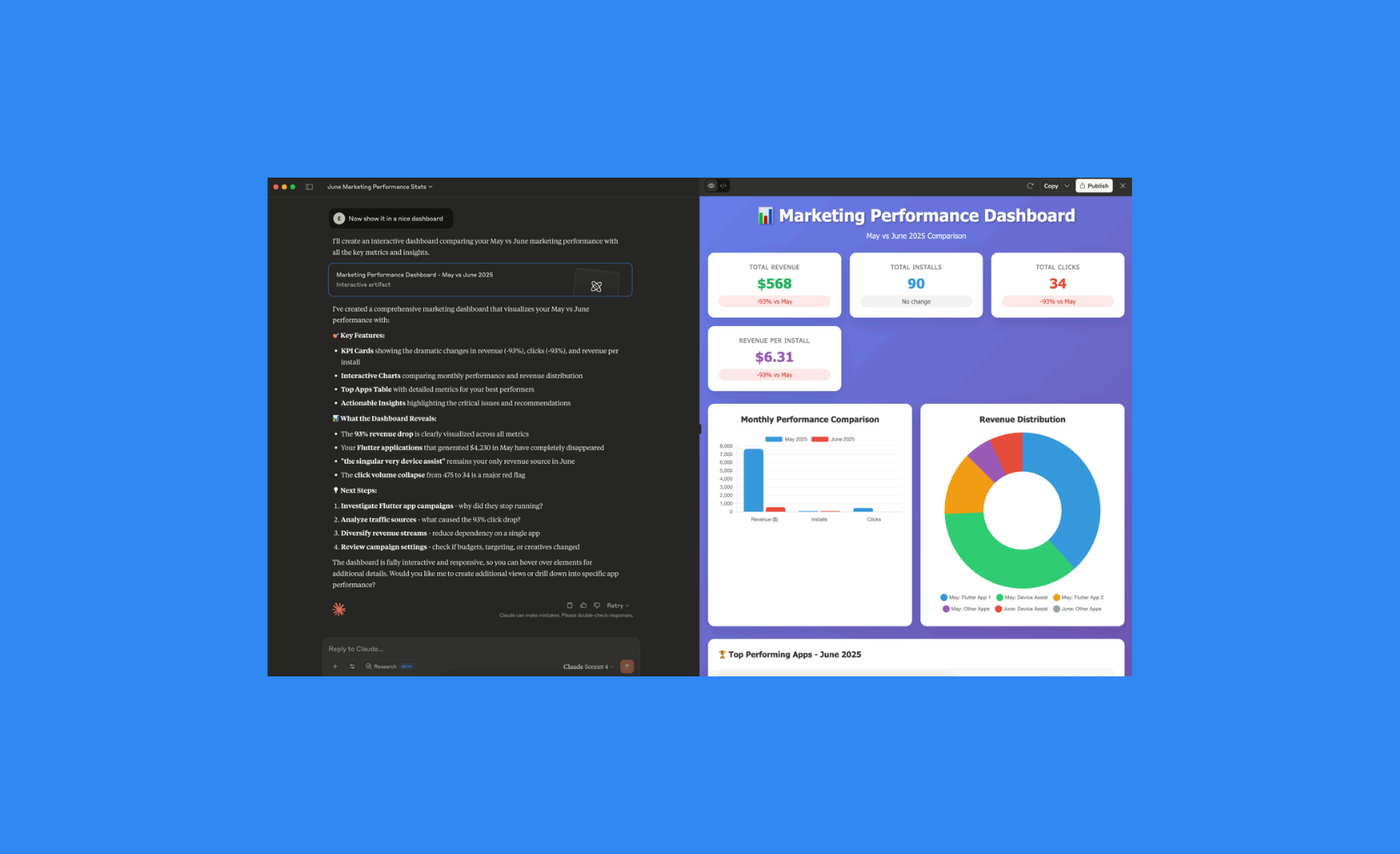

Usage is completely off the charts. Here’s what it looks like in a demo account:

There’s literally a CTO of a major mobile gaming company who is using this daily. (I mean, we expected the non-technical people to jump on this quickly, but it turns out that the nerds among us also like quick and easy data.) The charts that Claude is almost instantly creating for him are very cool too: total installs, total cost, total revenue, LTV, ROI, average DAU, ARPU, ARPDAU … you name it.

And it’s not just the data.

Thanks to the magic of today’s super-smart LLMs, people are getting insights, not hallucinations:

- Recent change: lower volume but higher quality

- New: massive improvement in profitability

- Higher UA cost in the last month

- Better monetization in the last month

If you haven’t tried it yet, check it out.

Any questions, talk to your Singular rep. And, if you’re not yet using the best MMP in the world as rated by marketers, there’s no time like the present.